Mehrdad Farahani

PhD candidate at Chalmers & University of Gothenburg

I study how large language models work under the hood, tracing and editing their internal representations to make them more interpretable and controllable. My focus is on mechanistic interpretability, causal analysis, and behavior editing in LLMs.

Curious about how LLMs really think? Let’s dig in.

💬 Interested in thesis supervision? I supervise projects related to NLP, mechanistic interpretability, and LLM behavior analysis. See the available topics or send me an email.

News

| Date | Announcement |
|---|---|
| Jul 07, 2025 | 2025 Master's Thesis Supervision Open |
| Feb 16, 2024 | 2024 Master's Thesis Supervision Open |

Selected Publications

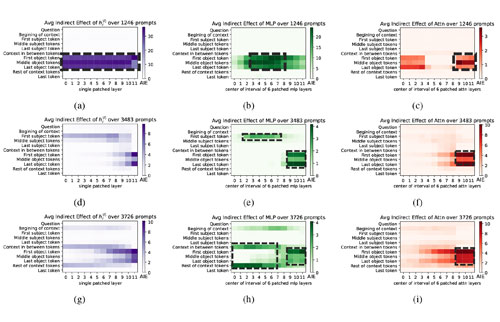

- Deciphering the Interplay of Parametric and Non-Parametric Memory in RAG Models. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP), Nov 2024